A Gentle Introduction to the Self-Attention Mechanism

- Benjamin Chen

- April 17, 2024

- 4 min read

Acknowledgment: This blog post draws heavily from the invaluable teachings of Professor Lee Hung-Yi, a revered figure at the National Taiwan University. The explanations presented herein are largely inspired by and adapted from his insightful video on YouTube. I intend to consolidate and share the knowledge gleaned from his videos for those who may not comprehend Mandarin. If Mandarin is within your grasp, I encourage you to explore the original video here. To better understand the content of this blog, it’s also recommended that you have some fundamentals in neural networks.

What is self-attention?

Self-attention is a type of neural network layer. In short, it takes in a set of input vectors and outputs another set of vectors. Before we go into how self-attention turns inputs into outputs, let’s establish some fundamentals and discuss a little bit about how vectors serve as inputs to a model.

Vector Set as Input

In certain tasks, we have to feed a set of vectors into a model as input. For example, if we want to feed a sentence into a model for an NLP task (e.g. translation), the sentence as a whole is encoded as a vector set, with each word represented by its own vector.

There are many ways to encode words as vectors, the most straightforward being one-hot encoding. Imagine that each vector is as long as the vocabulary, that is, the number of distinct words the model knows. Each word is assigned an index, and the word’s vector has a 1 at that index and 0 everywhere else. Below is an example of one-hot encoding. The downside of one-hot encoding for text is that the distance between the vectors of different words has no real meaning: every pair of distinct words is equally far apart.
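To make the idea concrete, here is a minimal sketch of one-hot encoding in Python with NumPy. The four-word vocabulary and the helper name `one_hot` are made up for illustration; a real vocabulary would be far larger.

```python
import numpy as np

# Hypothetical toy vocabulary; a real one would hold tens of thousands of words.
vocab = ["cat", "dog", "eat", "saw"]
word_to_index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Return a vector as long as the vocabulary with a single 1 at the word's index."""
    vec = np.zeros(len(vocab))
    vec[word_to_index[word]] = 1.0
    return vec

dog, cat, eat = one_hot("dog"), one_hot("cat"), one_hot("eat")
print(dog)  # [0. 1. 0. 0.]

# Any two distinct one-hot vectors are the same distance apart (sqrt(2)),
# so the distance says nothing about how related the words are.
print(np.linalg.norm(dog - cat), np.linalg.norm(dog - eat))
```

Both printed distances are identical, which is exactly the weakness described above: "dog" is no closer to "cat" than it is to "eat".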

Another popular method of encoding words into vectors is called word embedding. The specifics of deriving the vectors via word embedding would be for another blog post, but essentially, word embedding encodes words such that the distance between the vectors of different words has semantic meaning. For example, since the word “dog” is semantically more related to “cat” than to a verb like “eat”, the distance between the vectors of “dog” and “cat” would be much smaller than that between the vectors of “dog” and “eat”.
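The sketch below illustrates the point with hand-picked numbers. These three-dimensional vectors are invented for this example and do not come from any trained embedding model; real embeddings are learned and usually have hundreds of dimensions.

```python
import numpy as np

# Made-up 3-dimensional embeddings, chosen by hand for illustration only.
embeddings = {
    "dog": np.array([0.9, 0.8, 0.1]),
    "cat": np.array([0.8, 0.9, 0.2]),
    "eat": np.array([0.1, 0.2, 0.9]),
}

def distance(a, b):
    """Euclidean distance between the embedding vectors of two words."""
    return float(np.linalg.norm(embeddings[a] - embeddings[b]))

print(distance("dog", "cat"))  # small: the two animals sit close together
print(distance("dog", "eat"))  # larger: the verb sits further away
```

Unlike the one-hot case, the distances now differ from pair to pair, so a model can use them as a signal of how related two words are.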

Ok, we’re getting a bit off-topic. Let’s pull back to our focus. We now know that a model often has to take in a set of vectors (and the number of vectors can vary) as its input. What about the output? Depending on the task, the model can produce a single output, a fixed number of outputs, or an undetermined number of outputs. Let’s look at each case.

A Single Output

When we perform a task like sentiment analysis (e.g. determining the sentiment of a piece of feedback), the model outputs a single value for the whole input sequence. That value can be a label such as “positive” or “negative”. This should be quite easy to understand.

A Fixed Number of Outputs

In this case, the number of outputs will be the same as the number of input vectors. You can almost imagine that each output corresponds to an input vector. A task where this is the case is POS Tagging. Essentially, we are trying to assign each word to a particular part of speech.

An Undetermined Number of Outputs

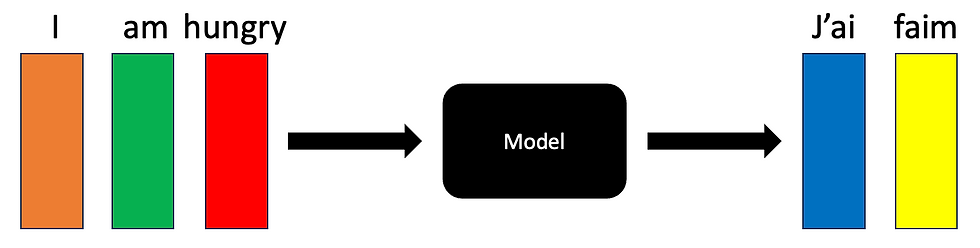

In the third scenario, we don’t know in advance how many outputs the model will produce (it will likely not be the same as the number of input vectors). We let the model decide by itself how many outputs to produce. An easy-to-understand example is translation. When you translate a sentence from English to French, you don’t know in advance how many words will be in the translation. We let the model decide what’s best.

In this post on self-attention, we will focus on the second type of output, where the number of outputs is equal to the number of inputs. This is also called Sequence Labelling.

Sequence Labelling

Intuitively, the simplest way to assign each input vector a corresponding output is to apply the same fully connected neural network to each vector separately.

The problem with this approach is that each output depends solely on that one single input vector. This would be a major flaw in tasks like the previously mentioned POS tagging. In our previous example, the word “saw” appears twice with different meanings depending on the context (as it would in a sentence like “I saw a saw”). The first is a verb whereas the second is a noun. If we were to apply a neural network to each input vector separately, the outputs for the two “saw”s would be the same, because the two input vectors are identical. In short, this method does not consider the context of the word (the words that come before and after it).
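Here is a minimal sketch of that flaw. Everything in it is an assumption made for illustration: the sentence “I saw a saw”, the random word vectors, the single fully connected layer, and the sizes (4-dimensional vectors, 3 possible tags). The weights are random rather than trained, since the point is only that identical inputs give identical outputs.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 4-dimensional word vectors, 3 possible POS tags.
d_in, n_tags = 4, 3
W = rng.normal(size=(d_in, n_tags))
b = rng.normal(size=n_tags)

def tag_scores(x):
    """One fully connected layer applied to a single word vector."""
    return x @ W + b

# Made-up vectors for "I saw a saw"; both occurrences of "saw" share one vector.
i_vec, saw_vec, a_vec = rng.normal(size=(3, d_in))
sentence = [i_vec, saw_vec, a_vec, saw_vec]

# The network sees one word at a time and nothing else.
outputs = np.stack([tag_scores(x) for x in sentence])

print(outputs.shape)                          # (4, 3): one score vector per word
print(np.allclose(outputs[1], outputs[3]))    # True: both "saw"s get the same scores
```

No amount of training can fix this, because the network never receives any information that distinguishes the first “saw” from the second.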

So what can we do so that we can take into account the nearby words? Another approach is to use a window. It’s very similar to the previous approach except each neural network takes in additional nearby input vectors.

This approach is slightly better than the first one, as it takes more context into account. However, it has a limit: it still doesn’t consider the whole sequence. Anything outside the window is ignored. It’s also hard to choose an optimal window size. Remember that sentences (or sequences in general) have different lengths. If you were to just set the window length to the length of the longest sentence, the network would need a very large number of parameters and a lot of computation.
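The window idea can be sketched as follows. The helper `windowed_inputs`, the zero padding at the sentence boundaries, and the example sentence “I saw a saw” with random vectors are all assumptions made for this illustration.

```python
import numpy as np

def windowed_inputs(sentence, radius):
    """For each position, concatenate the vectors within `radius` of it.

    Positions past either end of the sentence are filled with zero vectors
    (an assumed padding choice for this sketch).
    """
    n, d = sentence.shape
    pad = np.zeros((radius, d))
    padded = np.vstack([pad, sentence, pad])
    return np.stack([padded[i : i + 2 * radius + 1].ravel() for i in range(n)])

rng = np.random.default_rng(0)
i_vec, saw_vec, a_vec = rng.normal(size=(3, 4))
sentence = np.stack([i_vec, saw_vec, a_vec, saw_vec])  # "I saw a saw"

windows = windowed_inputs(sentence, radius=1)
print(windows.shape)  # (4, 12): each input now holds 3 word vectors of size 4

# The two "saw"s now have different inputs because their neighbours differ.
print(np.allclose(windows[1], windows[3]))  # False
```

Note that the input to the fully connected network grows with the window: each row has `(2 * radius + 1) * d` values, so the first weight matrix grows in proportion. That is why covering the longest possible sentence with one window becomes expensive.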

So what can we do to address this issue? This is where we introduce self-attention.

Self-Attention

Self-attention outputs the same number of vectors as it receives, but each output vector is computed from the entire sequence of input vectors. Since each vector produced by the self-attention module already takes the whole sequence into account, we can then feed each of them into a fully connected neural network to produce the final output for that position.
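The internals are left for the next chapter, but the input/output behaviour described here can already be checked with a small sketch. The code below assumes the commonly used scaled dot-product formulation with query, key, and value projections, which this post has not introduced yet, so treat the function body as a preview rather than an explanation. The weights and inputs are random placeholders.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Assumed scaled dot-product self-attention over a sequence X of shape (n, d)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # every position scores every position
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V                       # weighted mix of all positions

rng = np.random.default_rng(0)
d = 4
Wq, Wk, Wv = rng.normal(size=(3, d, d))  # random, untrained projection matrices
X = rng.normal(size=(5, d))              # a sequence of 5 input vectors

out = self_attention(X, Wq, Wk, Wv)
print(out.shape)  # (5, 4): one output vector per input vector

# Change only the LAST input vector and look at the FIRST output vector.
X_changed = X.copy()
X_changed[-1] = rng.normal(size=d)
out_changed = self_attention(X_changed, Wq, Wk, Wv)
print(np.allclose(out[0], out_changed[0]))
```

The second print shows whether the first output is unaffected by a change at the far end of the sequence. With a per-word network or a small window it would be unaffected; here every output depends on every input, regardless of how far apart they are.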

In the next chapter, we will dive into the black box of self-attention and explore how it turns input vectors into output vectors that consider the entire sequence of input vectors.
